This project aimed to make traditional lab-based learning in STEM education more accessible by using a web-based virtual lab to overcome the limitations of physical infrastructure. I led the end-to-end design process, working closely with a multidisciplinary team from concept through delivery. Through user testing and analysis, I identified key insights that informed iterative design refinements and guided the direction of next-phase development.

Laboratory education is a critical component of the STEM curriculum, providing students with essential hands-on experience to apply and reinforce what they learn in the classroom. However, offering sufficient lab opportunities remains challenging due to financial and infrastructural constraints, such as the high cost of equipment and operations, limited access, and a lack of instructional resources.

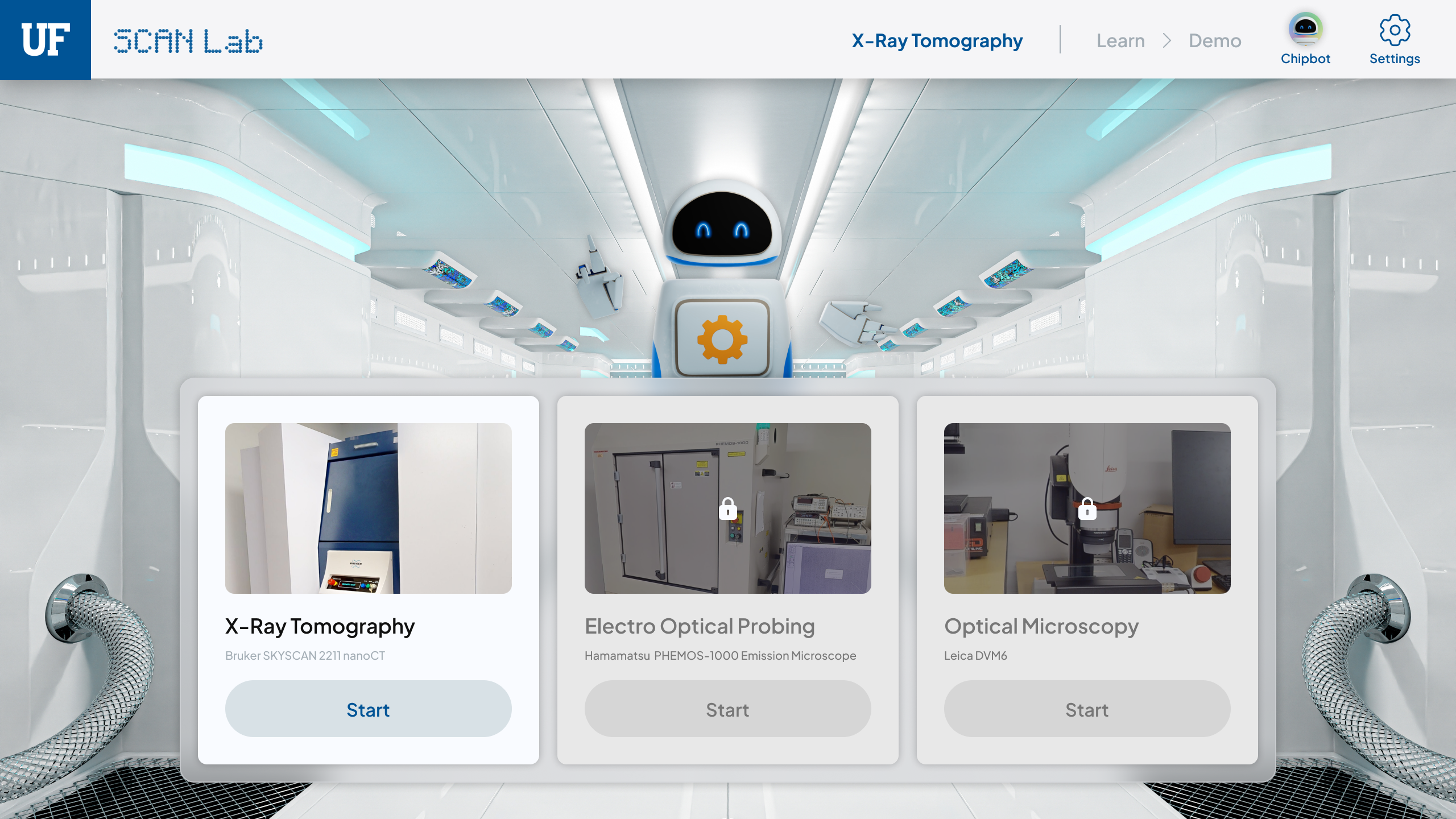

In collaboration with the Electrical and Computer Engineering Department (ECE) at the University of Florida (UF), we aimed to expand lab education opportunities for engineering students by creating virtual lab training. Specifically, our goal was to transform the SCAN Lab at UF into a virtual environment and develop training modules for its equipment. To make the lab more accessible to students, we chose a web-based format, with a VR version planned for future development.

In laboratory-based education, teacher support plays a critical role in student learning. A virtual science lab therefore needs to replicate, in a remote environment, the real-time instructional support that traditional labs provide.

Large language models (LLMs) can help bridge this gap by providing immediate, personalized feedback and explanations.

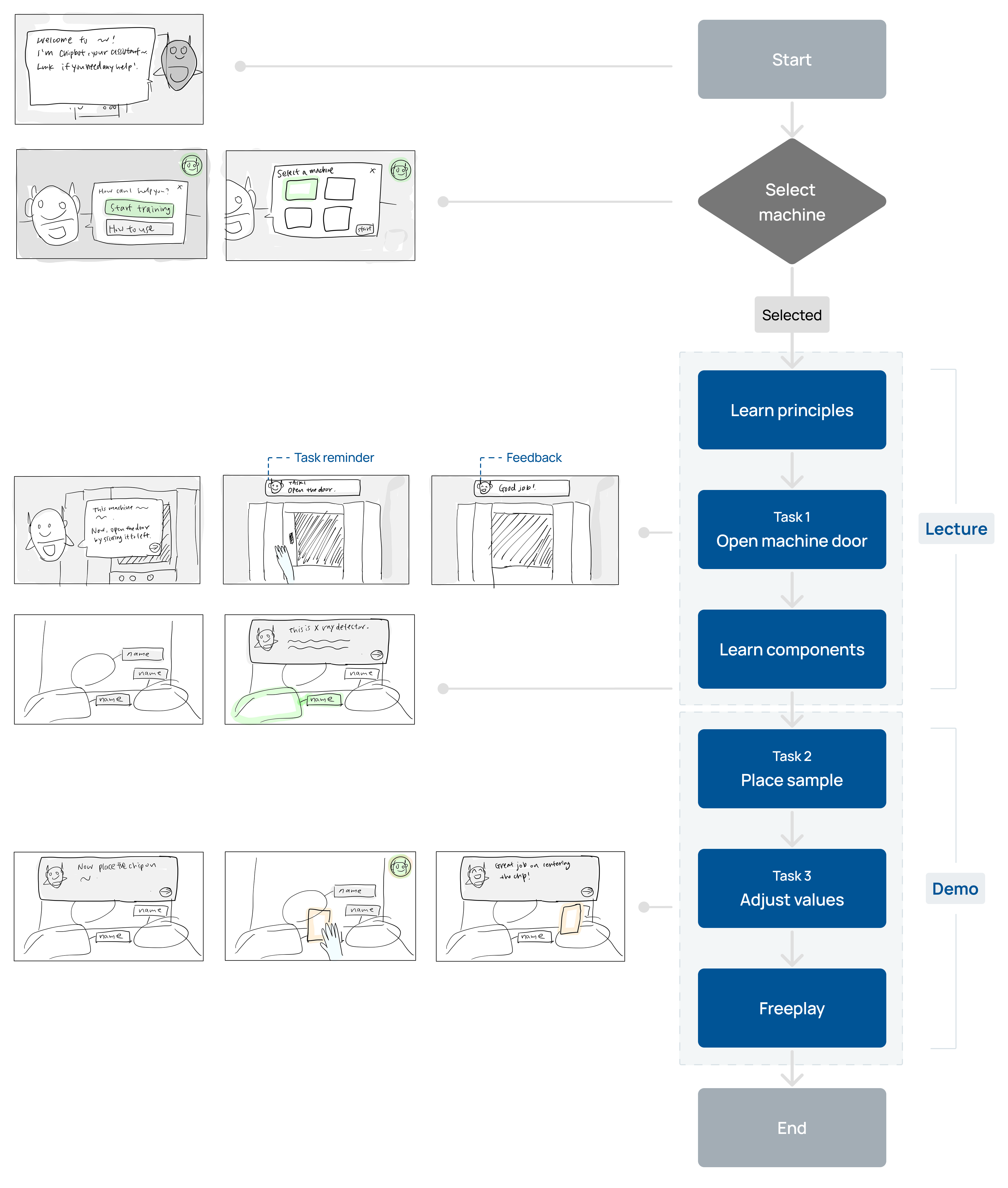

Based on the requirements identified through rounds of discussions with the project team and the ECE team, I created initial sketches and user flows.

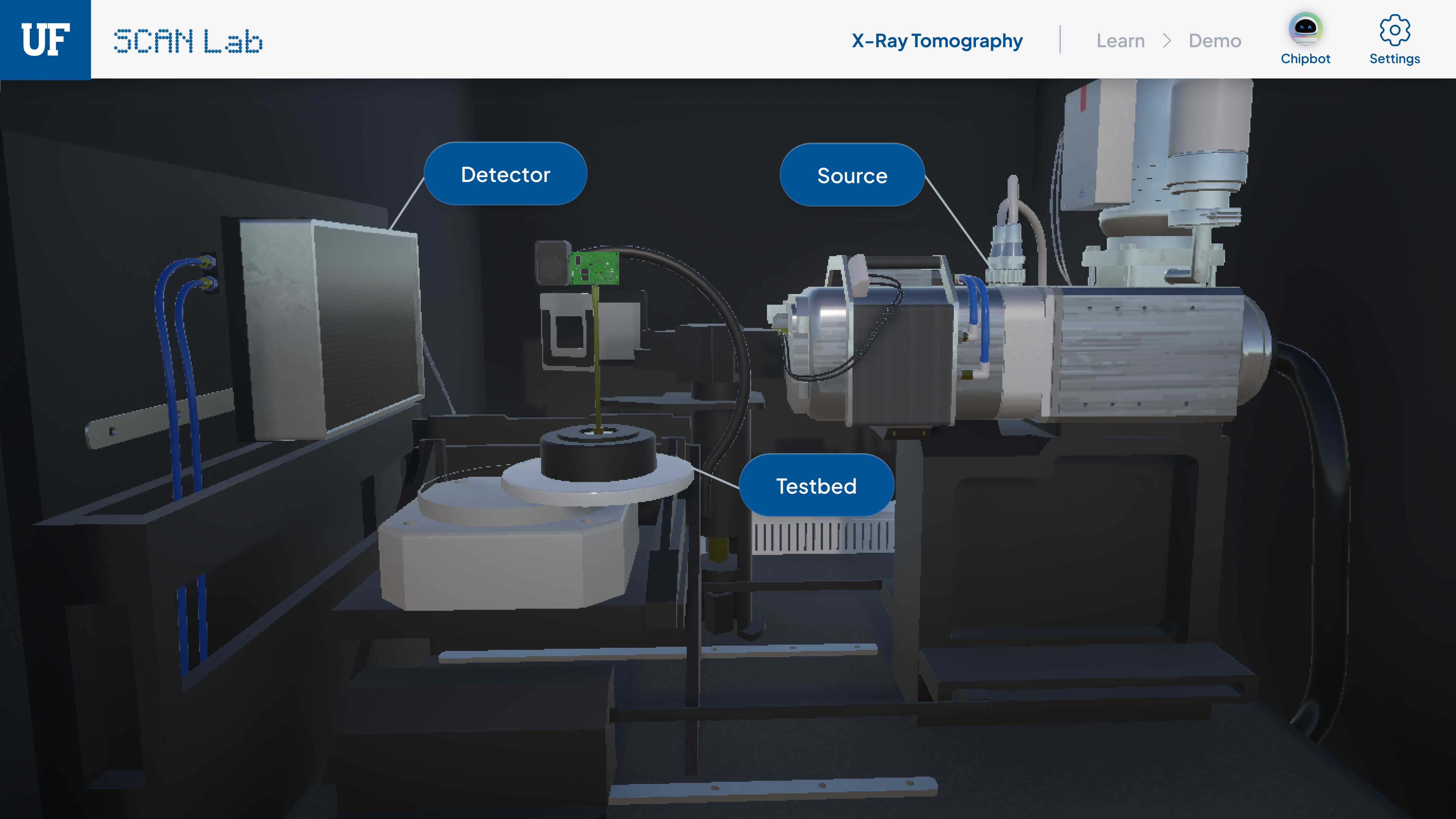

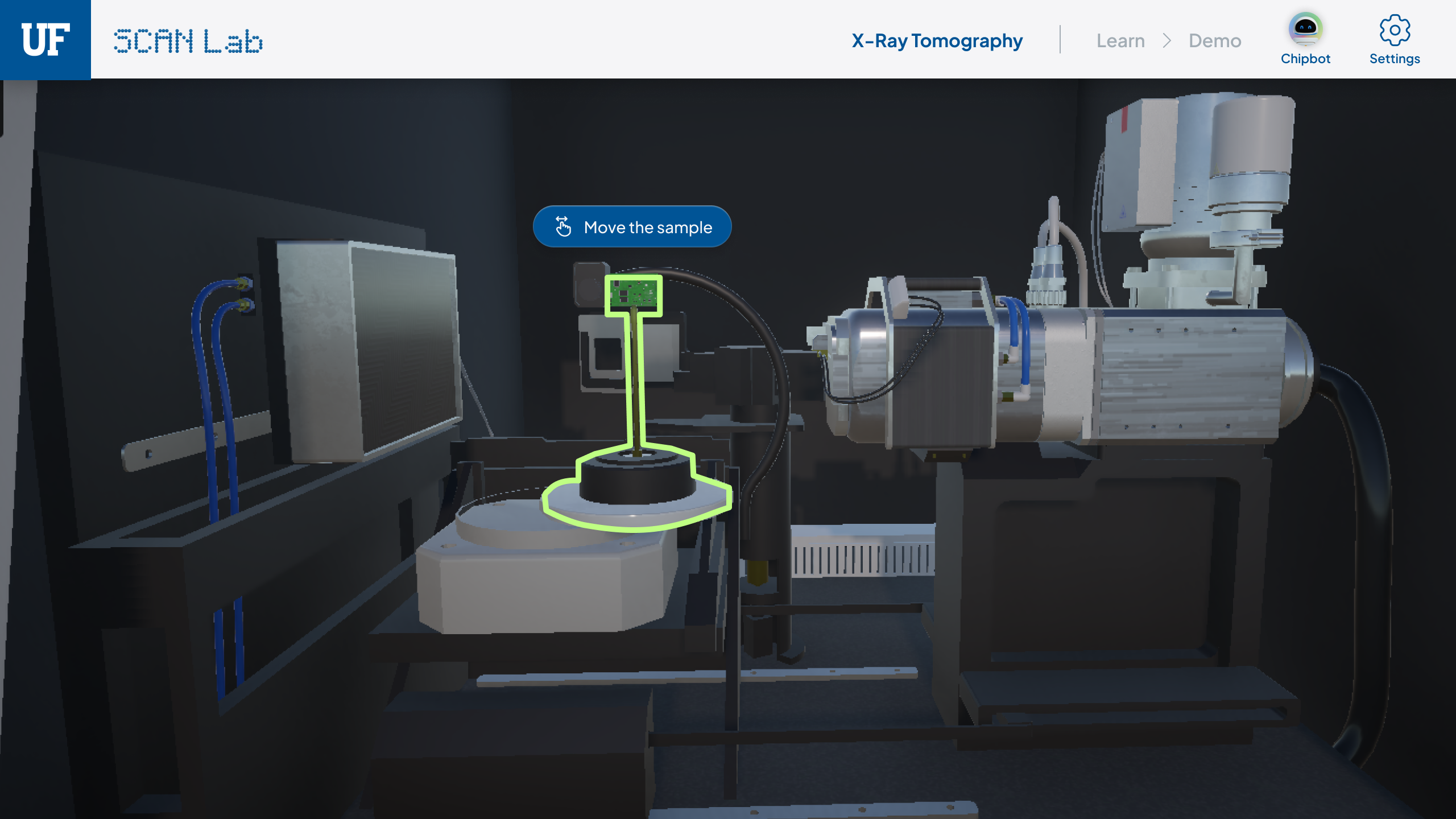

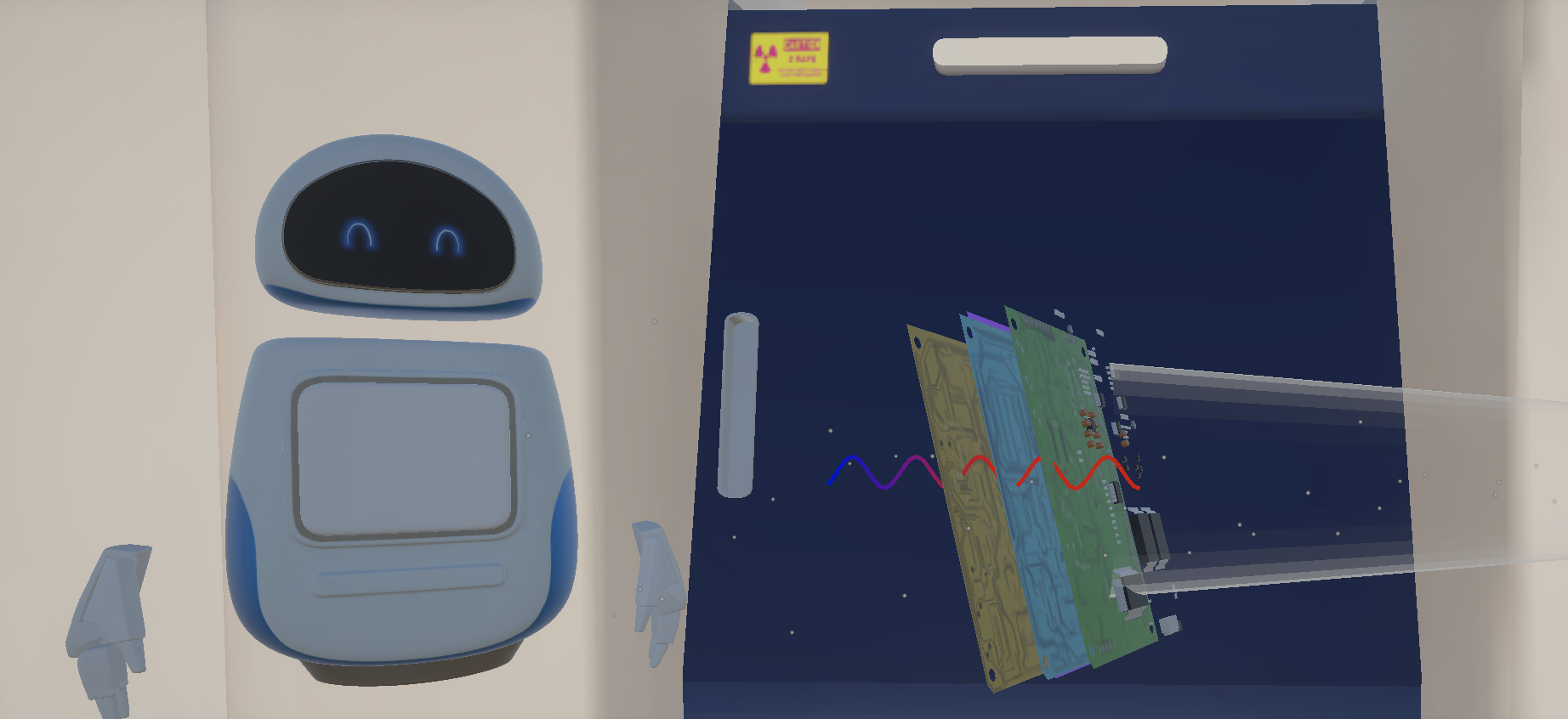

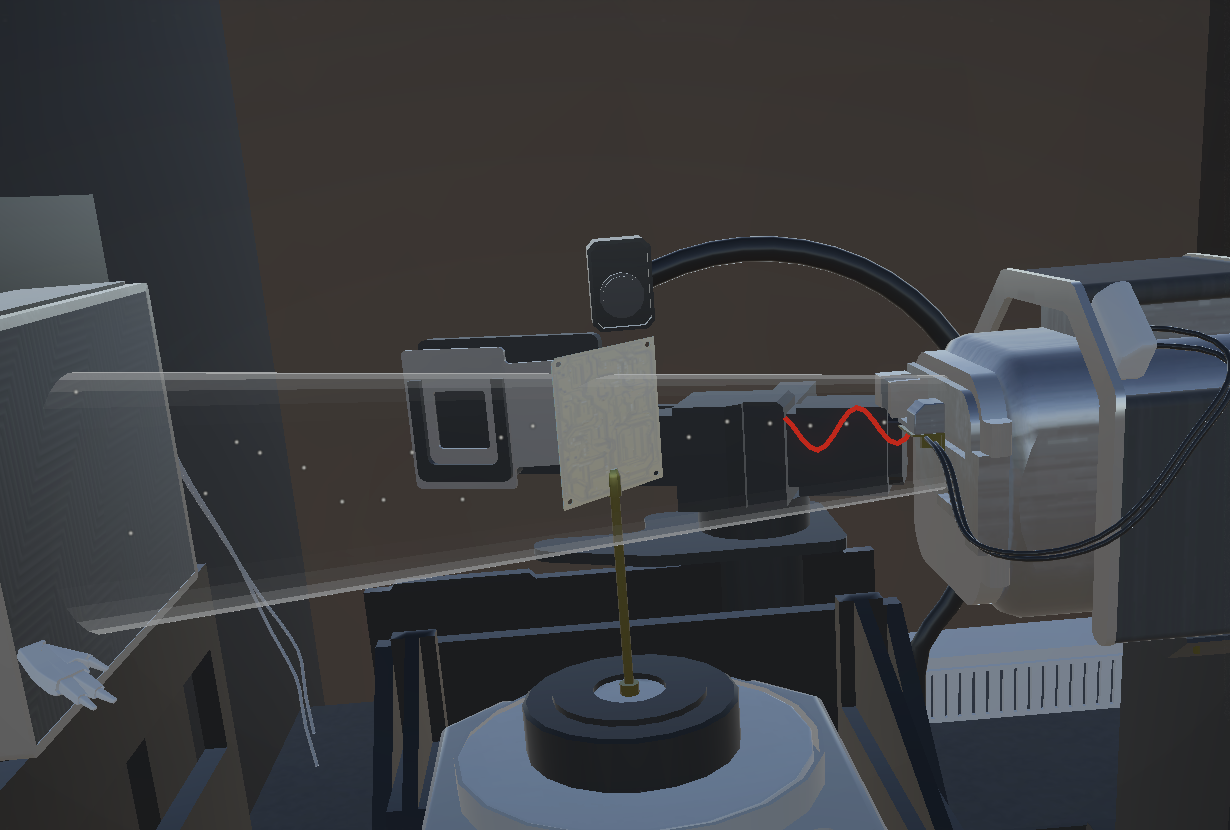

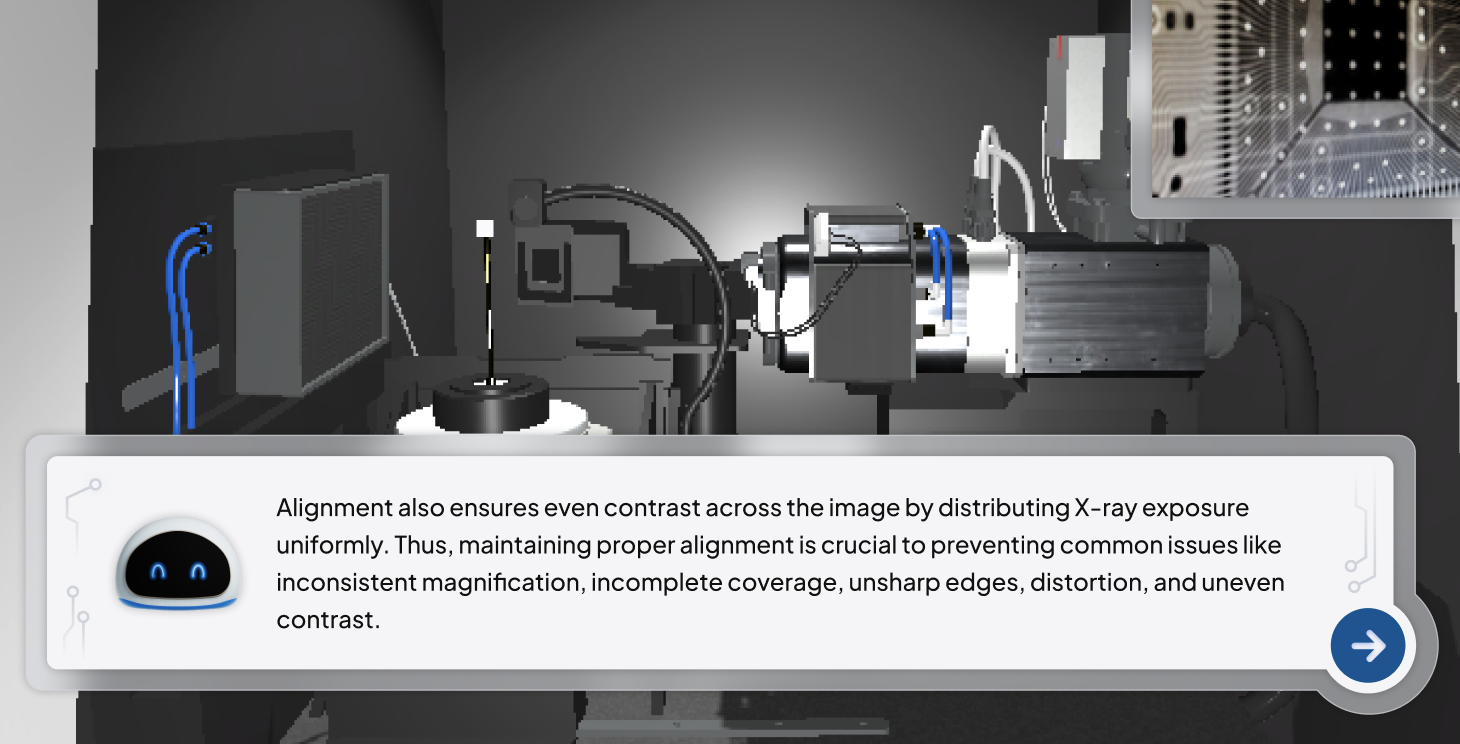

The 3D-modeled equipment replicates the real machine, including internal components that are normally hidden during operation, to help learners better understand how the equipment works.

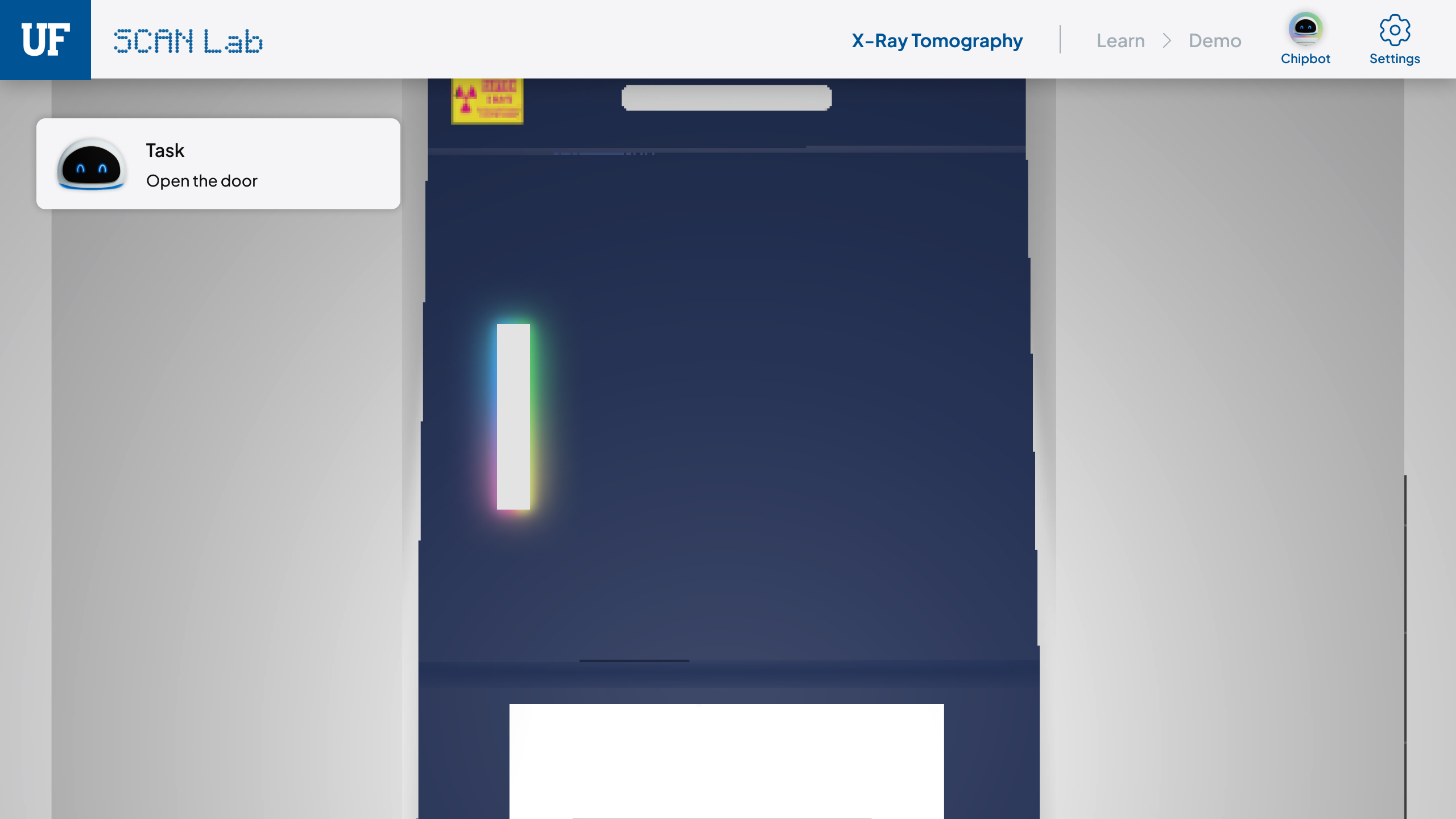

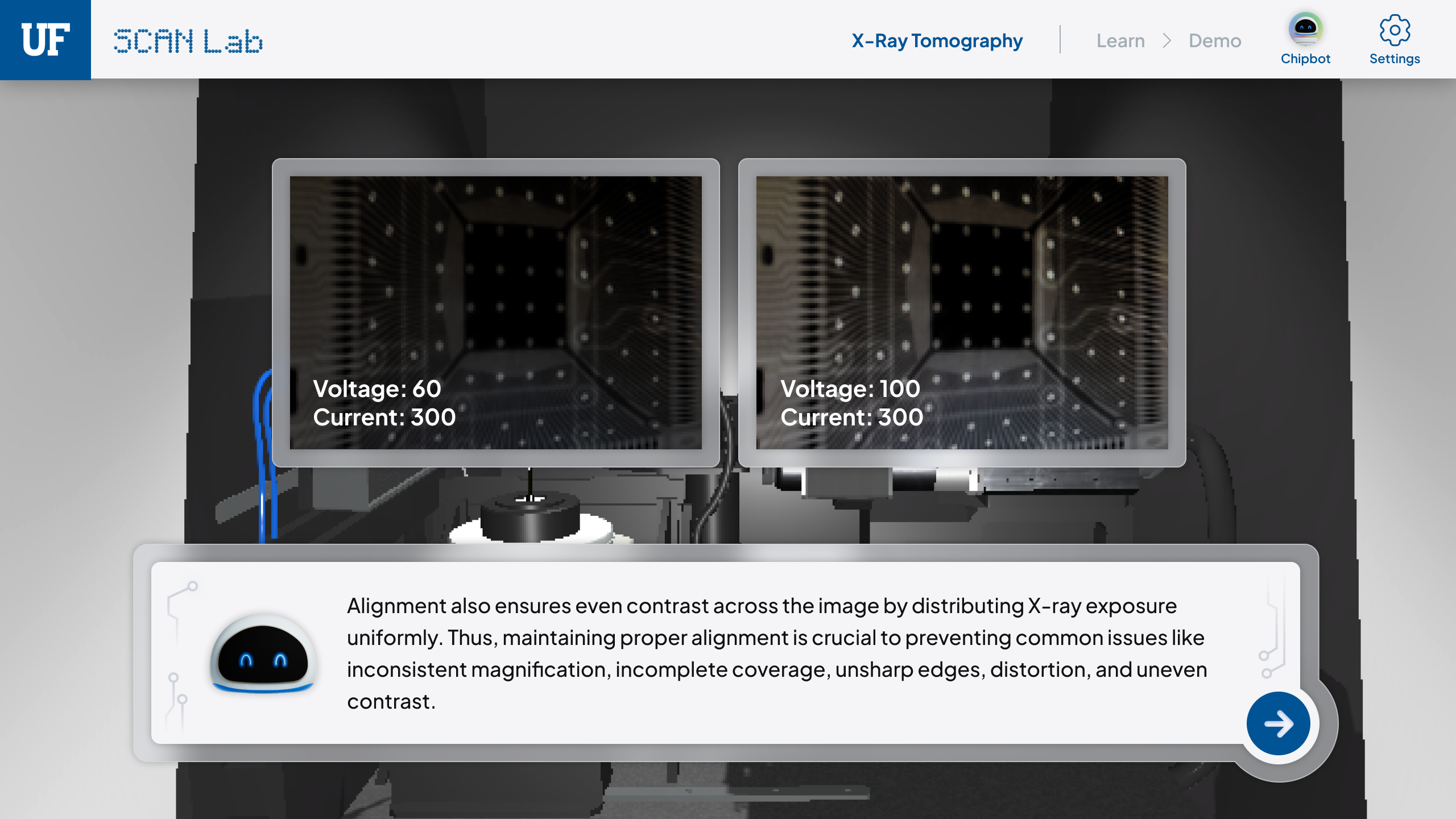

Learners manipulate different parameters firsthand to gain practical experience in a realistic, risk-free environment.

Along with text and auditory explanations, various visual materials such as 3D chip models and X-ray scans are provided to enhance understanding. Invisible elements like those revealed through X-rays are rendered into tangible visuals to help learners grasp abstract concepts.

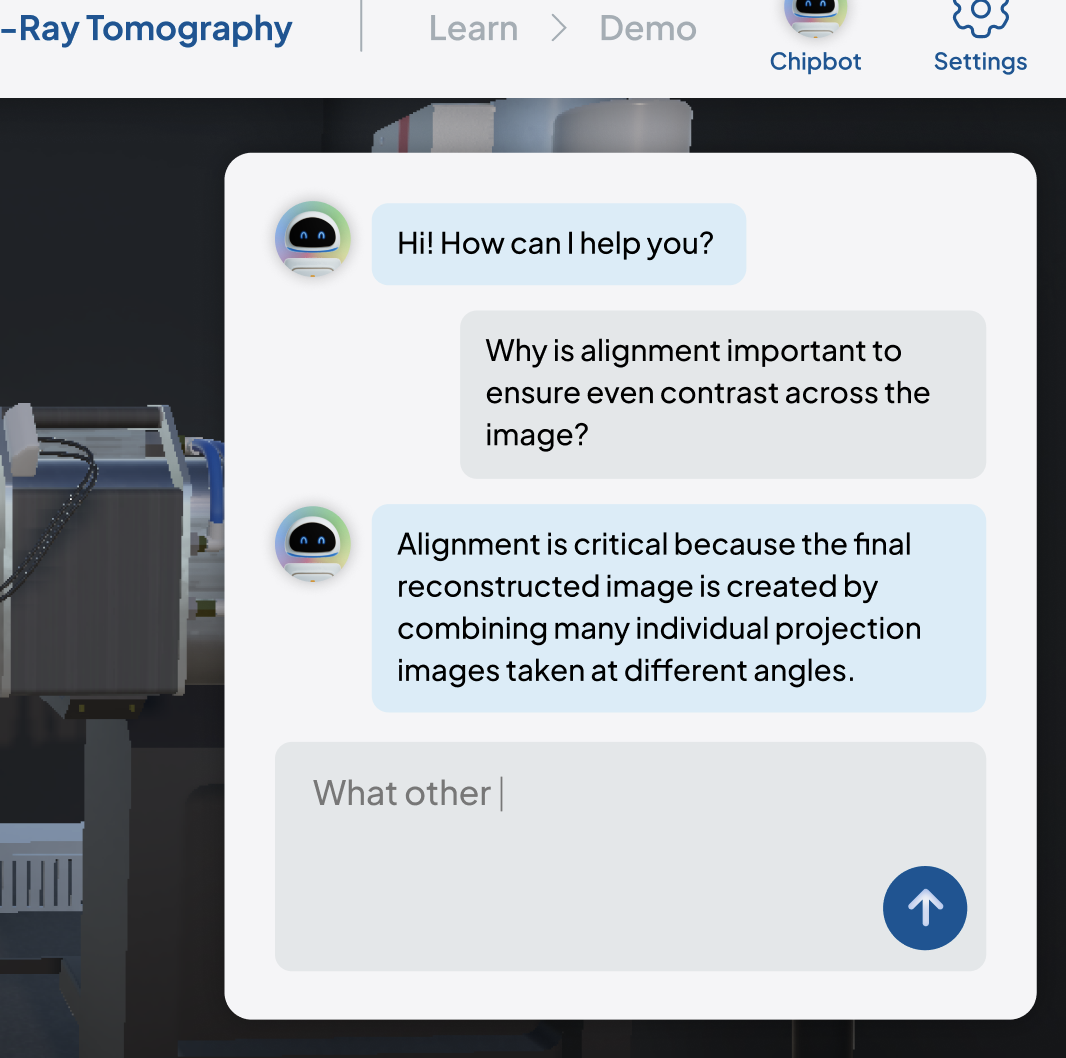

An AI teaching assistant embodied as a friendly robot provides explanations throughout the learning experience. A GPT-powered chat feature enables learners to ask questions in real time.

To evaluate and improve our virtual lab design, I conducted two rounds of user interviews combined with iterative design sessions involving science and engineering students. In the first interview, participants interacted with our virtual lab and shared feedback by comparing it to their experiences in traditional physical labs. In the follow-up interview, the same participants sketched their ideas in participatory design sessions. The interviews were transcribed, and a thematic analysis was conducted with an HCI researcher. The insights and feedback gathered from these sessions were incorporated into a revised prototype.

Participants

A total of nine participants were recruited according to the following criteria: (1) engineering students interested in semiconductor topics but with no prior knowledge of the specific content presented in the prototype (representing the target learner group), or (2) engineering students with previous experience as teaching assistants in lab-based sessions.

Initial Interview Insights

Based on the initial interview findings, we decided to focus the next design iteration on improving the virtual tutor interface.

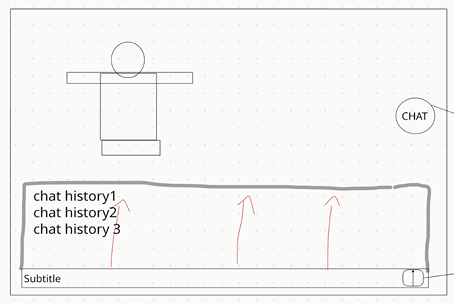

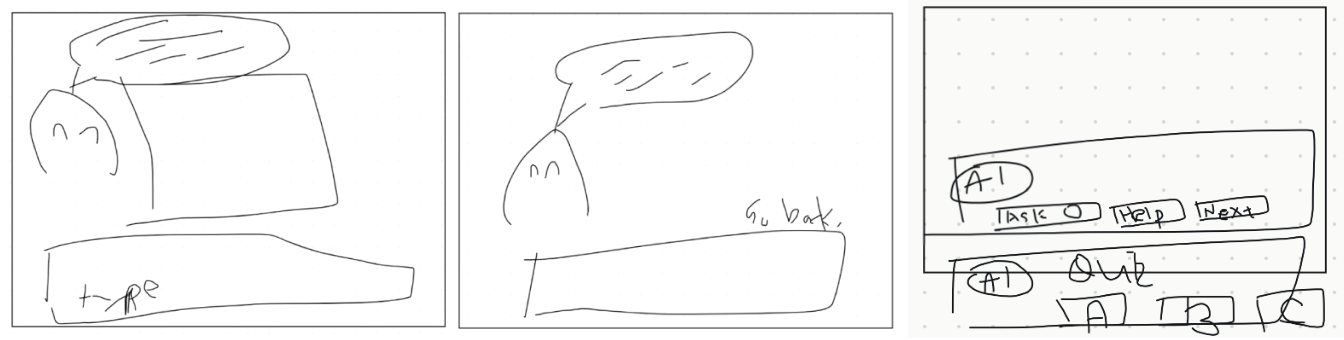

Follow-up Participatory Design Sketches

Chat History

Natural transition between Q&A and lecture

The next step is to evaluate the new interface through additional user testing. In response to our findings, we will design and incorporate a contextual AI system that delivers an adaptive and personalized learning experience. We also plan to transform the web-based version into a virtual reality experience to create more immersive interactions through embodied learning.